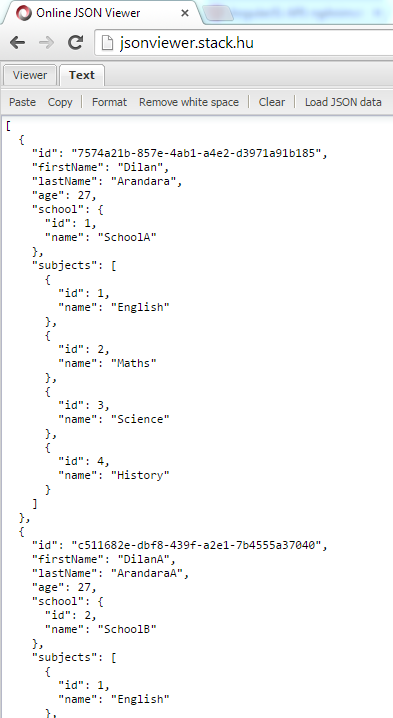

Assume you have a JSON endpoint whose response looks like the one below (captured with Fiddler).

Copy that JSON response and format it using a JSON viewer. In this demo I have used http://jsonviewer.stack.hu/.

Copy the code snippet as highlighted.

Create an empty class file in Visual Studio.

Then you can paste the JSON as classes (Edit > Paste Special > Paste JSON As Classes), as shown below.

The generated classes will look like the ones below.
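As a rough illustration of the result, assume the copied JSON was `{"id": 1, "name": "Sample", "school": {"name": "Sample School"}}`. This payload is made up for the sketch and is not the one from the demo. Paste JSON As Classes would then generate something along these lines, with a root class named `Rootobject` and property names that mirror the JSON keys:

```csharp
// Sketch of generated classes for a hypothetical payload.
// Property names keep the casing of the JSON keys.
public class Rootobject
{
    public int id { get; set; }
    public string name { get; set; }
    public School school { get; set; }
}

// Nested JSON objects become their own classes.
public class School
{
    public string name { get; set; }
}
```

You would normally rename `Rootobject` to something meaningful after pasting.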

Thursday, November 20, 2014

Change the JSON serialization to follow the Camel Case notation

In software development, we must follow naming conventions. In C#, the first letter of a property name should be capitalized, but in JavaScript it should not be.

If we create a default ASP.NET Web API project, the JavaScript naming convention is ignored in the JSON output.

In the following demo I will show the JSON response of a default Web API project and how to configure the project to use the correct naming conventions.

First create an ASP.NET Web API project.

Add School and Student classes to the model folder. Add an empty Web API controller called StudentsController to the controllers folder.

Content of the Student class should be like below

Content of the School class should be like below.

In the StudentsController add a Web API action method like below.
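The original code for these three files is not reproduced here, so the following is only a minimal sketch of what they could look like. All property names and sample values are hypothetical; only the class names `Student`, `School`, and `StudentsController` come from the post.

```csharp
using System.Collections.Generic;
using System.Web.Http;

// Hypothetical model: property names are illustrative only.
public class School
{
    public int SchoolId { get; set; }
    public string SchoolName { get; set; }
}

// Hypothetical model: property names are illustrative only.
public class Student
{
    public int StudentId { get; set; }
    public string FirstName { get; set; }
    public School School { get; set; }
}

public class StudentsController : ApiController
{
    // Handles GET api/students under the default Web API route.
    public IEnumerable<Student> Get()
    {
        return new List<Student>
        {
            new Student
            {
                StudentId = 1,
                FirstName = "Sample",
                School = new School { SchoolId = 10, SchoolName = "Sample School" }
            }
        };
    }
}
```

With the default configuration, the JSON keys in the response match the C# property names exactly, including the capital first letter.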

Then run the project and check the response in Fiddler.

You will see that the JSON naming conventions are not applied. All field names follow the C# classes.

To get the correct naming conventions, we must add a new setting to the WebApiConfig class, using the CamelCasePropertyNamesContractResolver class.
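A minimal sketch of that setting, assuming the standard `WebApiConfig.Register` method generated by the Web API 2 project template (the route definition is the template default and may differ in your project):

```csharp
using System.Web.Http;
using Newtonsoft.Json.Serialization;

public static class WebApiConfig
{
    public static void Register(HttpConfiguration config)
    {
        config.MapHttpAttributeRoutes();

        config.Routes.MapHttpRoute(
            name: "DefaultApi",
            routeTemplate: "api/{controller}/{id}",
            defaults: new { id = RouteParameter.Optional });

        // Tell Json.NET to write property names in camel case,
        // so a C# property FirstName is serialized as firstName.
        config.Formatters.JsonFormatter.SerializerSettings.ContractResolver =
            new CamelCasePropertyNamesContractResolver();
    }
}
```

The C# classes keep their Pascal case property names; only the serialized JSON changes.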

Again run the project and check the response in Fiddler. Now the JSON response has correct naming conventions.

Thursday, November 13, 2014

Deploy and Debug Azure WebJobs

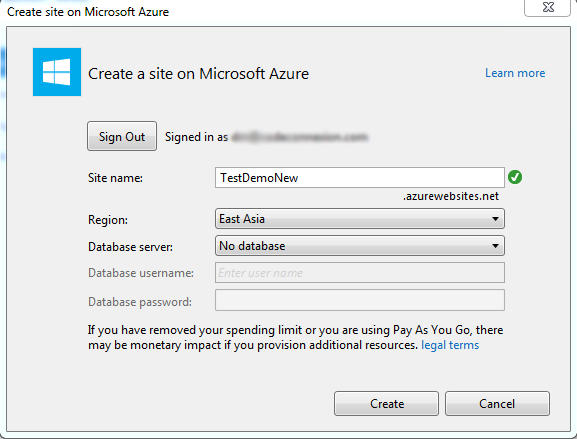

First right-click the WebClient web application project and select Publish.

In the opened dialog box you have to select Microsoft Azure Web Site as the publish target.

Sign in to an Azure subscription.

I haven't created a web site for this demo in Azure, so create a new one.

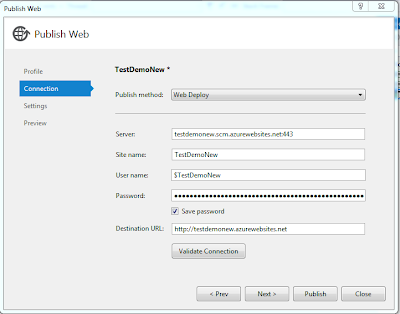

Publish the web site.

When you choose the settings, select Debug as the configuration, because we are going to debug this application using VS 2013. That selection enables remote debugging.

The web site will be published to Azure and you'll be able to see it in the Azure portal. WebJobs run from the App_Data folder of an existing WebSite, so the contents of the WebJobs are deployed to the App_Data folder.

Log in to Windows Azure using VS 2013, because for this demo we try to use the tools provided by Visual Studio as much as possible. After that you'll be able to see the Web Site we just created and the WebJobs we deployed with it.

Azure WebJobs use Azure Storage Queues for passing messages and Azure Blob containers for storing and retrieving files. Statistics about how and when WebJobs were executed are also stored in Azure Storage. For these things, the Azure WebSite must have storage account connection strings. So, using Visual Studio Server Explorer, create a new Azure Storage account.

In the properties of the newly created storage account, you can find the connection string.

Right-click and open the Azure Web Site settings panel.

There, create new connection strings named AzureWebJobsStorage and AzureWebJobsDashboard. Paste the Azure Storage connection string as the value of both.

Azure Web Jobs

An Azure WebJob is a lightweight process for an Azure WebSite, so we must not do heavy tasks in it, as it runs alongside the WebSite on the same VM. The WebSite is a container for WebJobs. WebJobs run outside of the WebSite's own process, in parallel with the w3wp process. Each WebJob is a separate process running in the VM. The WebJobs SDK has a binding and trigger system which works with Microsoft Azure Storage Blobs, Queues and Tables as well as Service Bus Queues.

The difference between an Azure WebJob and an Azure Worker Role is that Worker Roles are designed for heavy tasks and WebJobs are designed for lightweight tasks.

Currently there are three different ways of running a job in Azure WebJobs.

- Continuous

- Scheduled

- On demand

In this demo I'll show how to create various types of WebJobs using Visual Studio 2013.

Create an ASP.net MVC project like below.

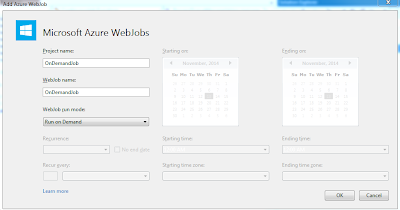

Then right-click the WebClient project, select Add in the menu, and then select New Azure WebJob Project.

In the popup form, specify the WebJob name and the WebJob run mode. As I have mentioned above there are three types of WebJobs.

First we will select the Run Continuously mode and create a WebJob. This allows the continuous WebJob to remain in memory so that it can monitor an Azure Storage Queue for incoming messages.

This will add a project to the solution like below.

In the Program.cs file, JobHost starts up the WebJob and blocks it from exiting, so the WebJob remains in memory continuously.

In the Functions.cs file, there's a trigger for an Azure queue called queue. The QueueTrigger attribute marks a method parameter as one that will be populated when messages land on the Storage Queue being watched by the code. (There are various other attributes for monitoring Queues, Blobs, or Service Bus objects.)
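The two files described above can be sketched as follows. This assumes the `Microsoft.Azure.WebJobs` NuGet package (WebJobs SDK 1.x) and is modeled on what the Visual Studio template generates, so treat it as an approximation rather than the exact code from the demo.

```csharp
using System.IO;
using Microsoft.Azure.WebJobs;

// Program.cs
class Program
{
    static void Main()
    {
        // Reads the AzureWebJobsStorage and AzureWebJobsDashboard
        // connection strings from configuration.
        var host = new JobHost();

        // Blocks the process so the host keeps listening for triggers.
        host.RunAndBlock();
    }
}

// Functions.cs
public class Functions
{
    // Called by the SDK whenever a new message arrives on the
    // storage queue named "queue"; the message body is bound to 'message'.
    public static void ProcessQueueMessage(
        [QueueTrigger("queue")] string message, TextWriter log)
    {
        log.WriteLine(message);
    }
}
```

Anything written to `log` shows up in the WebJobs dashboard for that function invocation.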

Create another project for a Run On Demand Azure WebJob like below. These types of WebJobs run only when a user elects to run the WebJob from within Visual Studio or from the Azure Management Portal.

In the Program.cs file, JobHost doesn't block the WebJob's EXE from exiting. Instead, it presumes a one-time run is needed, and then the WebJob simply exits. According to the code, this WebJob runs a method called ManualTrigger.

In the Functions.cs file, the ManualTrigger method is defined. It has a method attribute called NoAutomaticTrigger, which marks a function for which no automatic trigger listening is performed.
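A sketch of the on-demand variant, again assuming the `Microsoft.Azure.WebJobs` package (SDK 1.x) and modeled on the Visual Studio template. The argument value `20` and the queue name `queue` are placeholders.

```csharp
using System.IO;
using Microsoft.Azure.WebJobs;

// Program.cs
class Program
{
    static void Main()
    {
        var host = new JobHost();

        // Invoke a single function by name and pass its arguments;
        // Main then returns, so the WebJob process exits.
        host.Call(typeof(Functions).GetMethod("ManualTrigger"), new { value = 20 });
    }
}

// Functions.cs
public class Functions
{
    // NoAutomaticTrigger: the SDK never listens for events on behalf of
    // this function; it only runs when called explicitly.
    [NoAutomaticTrigger]
    public static void ManualTrigger(
        TextWriter log, int value, [Queue("queue")] out string message)
    {
        log.WriteLine("Function is invoked with value={0}", value);

        // The out parameter bound with [Queue] is written to the queue.
        message = value.ToString();
        log.WriteLine("Following message will be written on the Queue={0}", message);
    }
}
```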

Wednesday, March 19, 2014

Big Data

One of the biggest IT trends in the last few years has been 'Big Data'.

"Big data is the term for a collection of data sets so large and complex that it becomes difficult to process using on-hand database management tools or traditional data processing applications"

Data management has become an important competency in all kinds of organizations. Many leading companies in the world are making strategies to examine how they can transform their business using Big Data.

There are three main terms which we have to consider in the Big Data arena.

- Volume - There are terabytes of data to be examined.

- Variety - Not only numbers. We also have to consider geospatial data, 3D data, audio and video, and unstructured text, including log files and social media.

- Velocity - Massive amounts of data are generated in real time, even within microseconds.

According to GigaOM, the following things are happening in the realm of Big Data.

- Hadoop is becoming a true platform.

- Artificial intelligence is finally becoming something of a reality.

- New tools are making it possible for ordinary people to use analytics.

- Big data and cloud computing are intersecting in a major way.

- The legal system will attempt to develop and impose new rules.

As IT professionals, we must be aware of what's going on with Big Data. :)

Sunday, March 9, 2014

ASP.Net Web API with BSON

BSON is a binary serialization format. "BSON" stands for "Binary JSON", but BSON and JSON are serialized very differently. BSON is "JSON-like", because objects are represented as name-value pairs, similar to JSON. Unlike JSON, numeric data types are stored as bytes, not strings. BSON is mainly used as a data storage and network transfer format in the MongoDB database.

Native clients, such as .NET client apps, can benefit from using BSON in place of text-based formats such as JSON or XML. For browser clients, you will probably want to stick with JSON, because JavaScript can directly convert the JSON payload.

An advantage of Web API is content negotiation: the client can select the format in which it needs the data.

Create an ASP.NET Web API project and update the ASP.NET Web API NuGet packages.

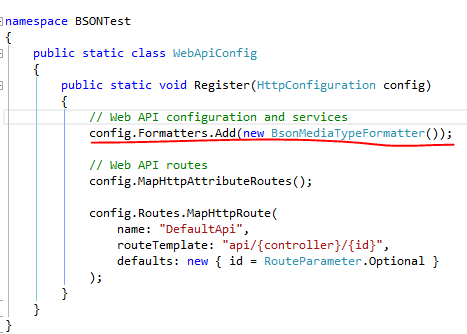

In the WebApiConfig file, add BsonMediaTypeFormatter. Now if the client requests "application/bson", Web API will use BSON formatting for the response.
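A minimal sketch of that registration, assuming Web API 2.1 or later and the template's default `WebApiConfig.Register` method (the route definition is the template default and may differ in your project):

```csharp
using System.Net.Http.Formatting;
using System.Web.Http;

public static class WebApiConfig
{
    public static void Register(HttpConfiguration config)
    {
        config.MapHttpAttributeRoutes();

        config.Routes.MapHttpRoute(
            name: "DefaultApi",
            routeTemplate: "api/{controller}/{id}",
            defaults: new { id = RouteParameter.Optional });

        // Adds BSON alongside the existing JSON and XML formatters;
        // it is selected when the request asks for application/bson.
        config.Formatters.Add(new BsonMediaTypeFormatter());
    }
}
```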

Add a simple class called Student.

Add an API controller called StudentController and change the Index method like below.

Using Fiddler, compose a JSON request like below.

And the response will be like;

Now set the accept header as application/bson.

Now the response will be like;

Generally speaking, if your service returns mostly binary, numeric, and non-textual data, then BSON is the best choice.
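For a .NET client, the same request can be made in code instead of Fiddler. The following is a sketch that assumes the `Microsoft.AspNet.WebApi.Client` package; the `Student` properties, the `BsonClient` helper, and the request URI are hypothetical.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Formatting;
using System.Net.Http.Headers;
using System.Threading.Tasks;

// Hypothetical shape of the Student class; adjust to match the server.
public class Student
{
    public int Id { get; set; }
    public string Name { get; set; }
}

public static class BsonClient
{
    // requestUri is whatever URI your StudentController action answers on.
    public static async Task<Student> GetStudentAsync(Uri requestUri)
    {
        using (var client = new HttpClient())
        {
            // Ask the server for BSON through content negotiation.
            client.DefaultRequestHeaders.Accept.Clear();
            client.DefaultRequestHeaders.Accept.Add(
                new MediaTypeWithQualityHeaderValue("application/bson"));

            HttpResponseMessage response = await client.GetAsync(requestUri);
            response.EnsureSuccessStatusCode();

            // Deserialize the BSON body with the matching formatter.
            var formatters = new MediaTypeFormatter[] { new BsonMediaTypeFormatter() };
            return await response.Content.ReadAsAsync<Student>(formatters);
        }
    }
}
```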

According to the ASP.NET Web API 2.1 release:

- BSON was designed to be lightweight, easy to scan, and fast to encode/decode.

- BSON is comparable in size to JSON. Depending on the data, a BSON payload may be smaller or larger than a JSON payload. For serializing binary data, such as an image file, BSON is smaller than JSON, because the binary data is not base64-encoded.

- BSON documents are easy to scan because elements are prefixed with a length field, so a parser can skip elements without decoding them.

- Encoding and decoding are efficient, because numeric data types are stored as numbers, not strings.

Saturday, March 8, 2014

Windows Azure Queue Storage

In my previous post I explained Windows Azure Storage and how to create it via the Azure portal. In this post I'll explain Azure Queue storage.

Windows Azure Queue storage is a service for storing large numbers of messages that can be accessed from anywhere in the world via authenticated calls using HTTP or HTTPS.

If you are going to use development storage, you may have to check that the Azure storage client library version and the emulator version are compatible. When I was using development storage version 2.2 with Azure storage client library version 3, I got errors related to invalid HTTP headers and 400 Bad Request responses. Please go through this link if you are going to use development storage.

Add a new cloud project, then add a web role and a worker role.

Expand Server Explorer and you can see the Azure storage explorer.

Add a QueueStorage connection string to the web role's cloud service config file.

Add the following code to the Home controller.
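The original controller code is not reproduced here, so this is a minimal sketch of adding a message to a queue. It assumes the `Microsoft.WindowsAzure.Storage` client library (version 3.x) and the `CloudConfigurationManager` class from the Azure SDK of that time; the queue name `demo-queue` and the message text are placeholders.

```csharp
using System.Web.Mvc;
using Microsoft.WindowsAzure;               // CloudConfigurationManager
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.Queue;

public class HomeController : Controller
{
    public ActionResult Index()
    {
        // "QueueStorage" is the connection string setting added to the
        // web role's service configuration.
        CloudStorageAccount account = CloudStorageAccount.Parse(
            CloudConfigurationManager.GetSetting("QueueStorage"));

        CloudQueueClient client = account.CreateCloudQueueClient();

        // Placeholder queue name; it is created on first use.
        CloudQueue queue = client.GetQueueReference("demo-queue");
        queue.CreateIfNotExists();

        // Put a message on the queue for the worker role to pick up.
        queue.AddMessage(new CloudQueueMessage("Hello from the web role"));

        return View();
    }
}
```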

You must follow these naming conventions for Azure Queues.

- A queue name must start with a letter or number, and can only contain letters, numbers, and the dash (-) character.

- The first and last letters in the queue name must be alphanumeric. The dash (-) character cannot be the first or last character. Consecutive dash characters are not permitted in the queue name.

- All letters in a queue name must be lowercase.

- A queue name must be from 3 through 63 characters long.

Now we'll look at how to consume queue messages from the worker role.

Add the following code to the worker role.

After you call queue.GetMessage(), the message becomes invisible, which means no other consumer is allowed to read it. When you have finished processing, you can delete the message. But if the process crashes, the message becomes visible in the queue again after some time. That is, each retrieved queue message has an invisibility timeout. You can read further about poison message handling in queues.
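The consume-then-delete flow on the worker role can be sketched as follows. It assumes the same `Microsoft.WindowsAzure.Storage` 3.x library, that the `QueueStorage` setting is also added to the worker role's configuration, and the placeholder queue name `demo-queue`. The 30-second timeout, 5-second sleep, and dequeue limit of 3 are arbitrary illustration values.

```csharp
using System;
using System.Diagnostics;
using System.Threading;
using Microsoft.WindowsAzure;               // CloudConfigurationManager
using Microsoft.WindowsAzure.ServiceRuntime;
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.Queue;

public class WorkerRole : RoleEntryPoint
{
    public override void Run()
    {
        CloudStorageAccount account = CloudStorageAccount.Parse(
            CloudConfigurationManager.GetSetting("QueueStorage"));
        CloudQueue queue = account.CreateCloudQueueClient()
                                  .GetQueueReference("demo-queue");
        queue.CreateIfNotExists();

        while (true)
        {
            // The message stays hidden from other consumers for 30 seconds.
            CloudQueueMessage message = queue.GetMessage(TimeSpan.FromSeconds(30));

            if (message == null)
            {
                // Queue is empty; wait before polling again.
                Thread.Sleep(TimeSpan.FromSeconds(5));
                continue;
            }

            if (message.DequeueCount > 3)
            {
                // Simple poison message handling: give up on messages
                // that have already been picked up several times.
                queue.DeleteMessage(message);
                continue;
            }

            Trace.TraceInformation("Processing: {0}", message.AsString);

            // Delete only after successful processing; if the role crashes
            // before this line, the message reappears after the timeout.
            queue.DeleteMessage(message);
        }
    }
}
```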
